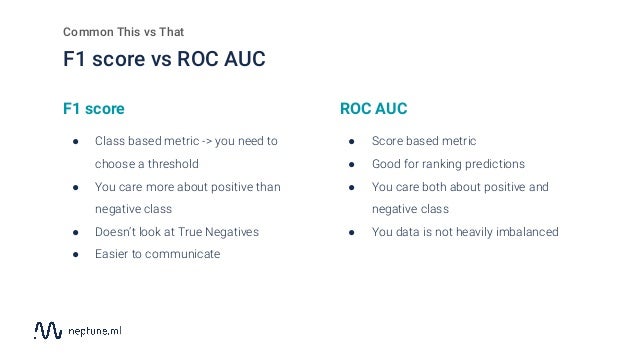

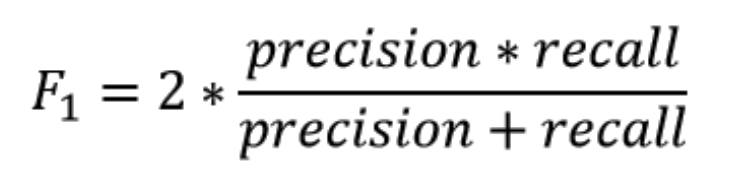

The F1 score combines precision and recall into a single number. It can be interpreted as a weighted average of the two; more precisely, it is their harmonic mean. When these values are close to 1, the model is predicting well. Which of the two matters more depends on the application: for example, in a good-or-bad loan classification we would like to decrease false negatives (FN) in order to increase recall. To see how the score responds to the decision threshold, view the F1 score by threshold for the best experiment.

For multiclass or multilabel problems, the scores can be aggregated in several ways. Counting globally, from the table we can compute the global precision to be 3 / 6 = 0.5, the global recall to be 3 / 5 = 0.6, and then a global F1 score of about 0.55. Support-weighted averaging (`average='weighted'` in scikit-learn) instead alters 'macro' averaging to account for label imbalance.

Reading list: an interesting article on the common binary classification metrics is available from neptune.ai.
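The global figures quoted from the table can be reproduced with a few lines of Python. This is a minimal sketch: the table itself is not shown here, so the counts below (3 true positives, 3 false positives, 2 false negatives) are inferred from the fractions 3 / 6 and 3 / 5, and the helper name `precision_recall_f1` is a hypothetical one chosen for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) computed from raw counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Assumed counts: 3 correct out of 6 predicted positives (3 / 6),
# and 3 found out of 5 actual positives (3 / 5).
micro_p, micro_r, micro_f1 = precision_recall_f1(tp=3, fp=3, fn=2)

print(f"precision={micro_p:.2f} recall={micro_r:.2f} f1={micro_f1:.2f}")
```

The unrounded F1 is 6 / 11, which is why the text reports it as roughly 0.55.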

Notice that you can usually push either precision or recall toward a perfect score by moving the threshold: raising it favors precision, while lowering it favors recall, each at the other's expense. Getting the threshold just right can actually improve the result, and the good thing is that you can find a sweet spot for the F1 metric.

In the pregnancy example, F1 score = 2 * (0.857 * 0.75) / (0.857 + 0.75) ≈ 0.80.

For multilabel problems there is also per-instance averaging: calculate metrics for each instance and find their average (only meaningful for multilabel classification, where this differs from `accuracy_score`).
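The pregnancy-example arithmetic can be checked directly. The sketch below assumes only the two values quoted in the text (precision 0.857, recall 0.75); the function name `f1_from` is hypothetical.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


pregnancy_f1 = f1_from(0.857, 0.75)
arithmetic_mean = (0.857 + 0.75) / 2

# A lopsided pair shows why the harmonic mean is used: a very low recall
# drags F1 down far more than it drags down a plain average.
lopsided_f1 = f1_from(1.0, 0.1)

print(f"pregnancy F1:    {pregnancy_f1:.3f}")
print(f"arithmetic mean: {arithmetic_mean:.3f}")
print(f"lopsided F1:     {lopsided_f1:.3f}")
```

With the rounded inputs the result is just under 0.80; with the exact fractions 6/7 and 3/4 it is exactly 0.8.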

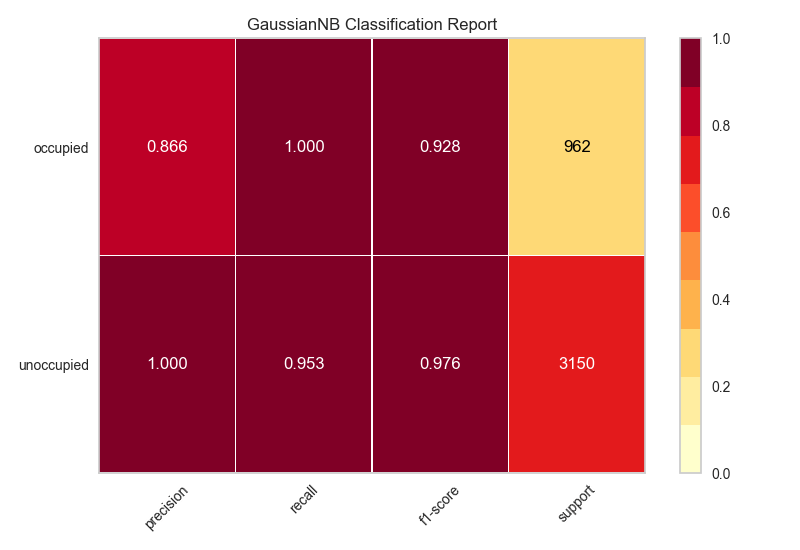

When we want an optimal blend of precision and recall, we can combine the two metrics using the F1 score. Because precision and recall both depend on the chosen threshold, it helps to have a single evaluation metric that combines them, and we can then adjust the threshold to optimize the F1 score.
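Adjusting the threshold to optimize F1 can be sketched as a simple sweep over candidate thresholds. Everything in this example is an assumption made for illustration: the labels and scores are made-up toy data, and `f1_at_threshold` and `best_f1_threshold` are hypothetical helper names, not functions from any library.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 when every score >= threshold is predicted positive."""
    tp = fp = fn = 0
    for label, score in zip(y_true, scores):
        predicted = score >= threshold
        if predicted and label == 1:
            tp += 1
        elif predicted and label == 0:
            fp += 1
        elif not predicted and label == 1:
            fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_f1_threshold(y_true, scores):
    """Try each distinct score as a threshold; keep the best F1."""
    best_t, best_f1 = None, -1.0
    for t in sorted(set(scores)):
        f1 = f1_at_threshold(y_true, scores, t)
        if f1 > best_f1:  # strict: ties keep the lowest threshold
            best_t, best_f1 = t, f1
    return best_t, best_f1


# Toy data (assumed): true labels and predicted probabilities.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.65, 0.7, 0.9]

best_t, best_f1 = best_f1_threshold(y_true, scores)
print(f"best threshold={best_t} with F1={best_f1:.2f}")
```

On this toy data the default cut-off of 0.5 gives an F1 of 0.75, while the sweep finds a lower threshold with a higher score. In practice the threshold should be tuned on validation data rather than on the test set.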

Both precision and recall take values between 0 and 1, so the F1 score, which combines them, does too.
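The earlier remark that one averaging mode alters 'macro' to account for label imbalance can be illustrated by comparing a plain macro average with a support-weighted one. The per-class counts below are invented for illustration (a frequent class "a" and a rare class "b"), and the code is a hand-rolled sketch rather than any library's implementation.

```python
def f1_from_counts(tp, fp, fn):
    """F1 from raw counts; 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Assumed confusion counts for an imbalanced two-class problem.
counts = {
    "a": {"tp": 90, "fp": 8, "fn": 10},  # support = tp + fn = 100
    "b": {"tp": 2, "fp": 10, "fn": 8},   # support = tp + fn = 10
}

per_class_f1 = {k: f1_from_counts(**c) for k, c in counts.items()}
support = {k: c["tp"] + c["fn"] for k, c in counts.items()}

# Macro: every class counts equally, however rare it is.
macro_f1 = sum(per_class_f1.values()) / len(per_class_f1)

# Weighted: each class's F1 is weighted by its support.
weighted_f1 = sum(per_class_f1[k] * support[k] for k in counts) / sum(support.values())

print(f"macro F1={macro_f1:.3f} weighted F1={weighted_f1:.3f}")
```

The macro average is pulled down by the poorly predicted rare class, while the weighted average mostly reflects the frequent class. Which one is appropriate depends on whether rare classes matter as much as common ones.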


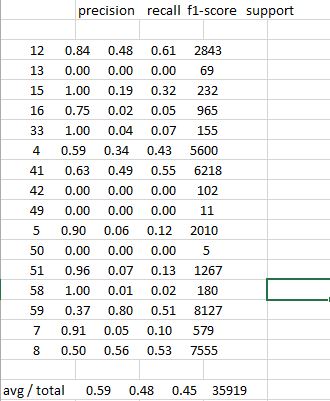
